This is a guest post by Tim Jones, founder at Launchmatic, an iOS & Android app screenshot design automation tool, and ex-Growth Manager at Keepsafe Photo Vault.

There’s no arguing that the Apple Rating Prompt delivers amazing results. According to an analysis by Apptentive, apps that implemented the rating prompt saw their daily rating count increase by 32x, with 90% of apps also showing a 20% increase in average star rating. The catch? It’s a black box of user engagement, with the only trackable data being your output metric of ratings & reviews.

Where’s the data, Apple?!

I really hope you get this reference

The lack of engagement data makes it impossible to run simultaneous experiments as there is no way to differentiate performance between user cohorts. This means you’re firing in the dark, hoping you hit as many engaged users as possible.

This is a difficult pill to swallow for teams that prioritize behavior-based product & design decisions. It was no different during my time at Keepsafe.

Our early implementation of the Rating Prompt showed a significant uptick in ratings but a decline in reviews. We wanted to get a better sense of how users were interacting with the prompt so we could optimize for better performance.

That’s where the Fake Prompt comes in.

DISCLAIMER: What follows is against the updated Apple Guidelines. Keepsafe and I no longer employ this experiment and do not condone it.

Which one is the fake?

The Fake Prompt

We created the Fake Prompt to answer 4 questions on our hunt to find the best implementation:

👤 Who should see the prompt?

⌚ When in the user life-cycle to show the prompt?

📍 Where during the session should the prompt pop up?

🔂 What cadence should the prompt follow?

We needed to experiment with the prompt integration across multiple cohorts to find the best-performing implementation for each. You can learn more about our implementation framework in my How to Increase Ratings & Reviews with the iOS Rating Prompt post.

In order for our results to accurately depict performance, we duplicated the UI/UX of the iOS Rating Prompt. Creating an identical experience ensures that the data we acquire during the smoke test will stay true once we move back to the native prompt.

We then added event-based tracking to help us determine the following performance metrics:

- Conversion (successful submission) of a rating

- Conversion (successful submission) of a review

- Average stars of a rating & review

- Character count of reviews by rating

- Performance of each individual prompt
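As a rough illustration of how metrics like these can be derived from tracked events, here is a minimal Python sketch. The event names (`prompt_shown`, `rating_submitted`, `review_submitted`), the event shape, and the sample data are all hypothetical, chosen only for this example.

```python
from collections import Counter

# Hypothetical event names, assumed for this sketch.
SHOWN = "prompt_shown"
RATING = "rating_submitted"
REVIEW = "review_submitted"


def prompt_metrics(events):
    """Summarize prompt performance from a list of event dicts.

    Each event is assumed to look like {"name": str, "stars": int (optional)}.
    """
    counts = Counter(e["name"] for e in events)
    shown = counts[SHOWN]
    rating_stars = [e["stars"] for e in events if e["name"] == RATING]
    review_stars = [e["stars"] for e in events if e["name"] == REVIEW]

    def rate(n):
        # Conversion is measured against the number of prompt impressions.
        return n / shown if shown else 0.0

    def mean(values):
        return sum(values) / len(values) if values else None

    return {
        "rating_conversion": rate(len(rating_stars)),
        "review_conversion": rate(len(review_stars)),
        "avg_rating_stars": mean(rating_stars),
        "avg_review_stars": mean(review_stars),
    }


# Made-up events for illustration only.
sample_events = (
    [{"name": SHOWN}] * 10
    + [{"name": RATING, "stars": 5}, {"name": RATING, "stars": 4}]
    + [{"name": REVIEW, "stars": 1}]
)
summary = prompt_metrics(sample_events)
print(summary)
```

Running the same function once per prompt variant or cohort would give the per-prompt comparison from the last bullet.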

Fake Prompt Performance

We used the Fake Prompt to test many hypotheses. The data below was collected from our best-performing variant, which has long since been reverted to the native prompt.

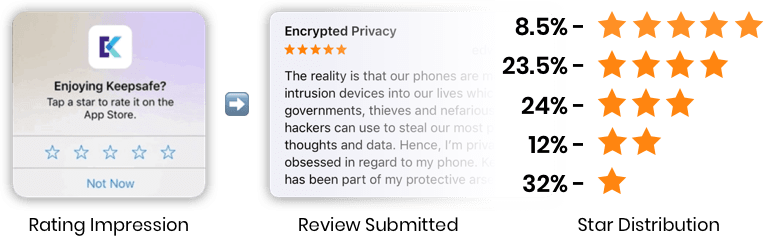

Conversion to a rating

Users converted at 13.5% to a submitted rating, with the vast majority of ratings being 5 stars. This resulted in an overall average rating of 4.7 stars.

This is further evidence that the rating prompt is a fantastic tool for acquiring quality ratings at scale.

Conversion to a review

Users converted at only 0.07% to a review when shown the prompt. That means that for every 10,000 people who see the prompt, only 7 will actually leave a review. The star distribution for these reviews is also highly negative, with 68% being 1–3 star reviews.

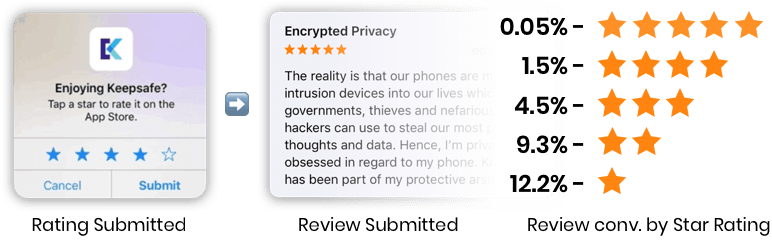

Conversion from a submitted rating to a review

Users who leave a bad rating through the Prompt, while rare, are much more prone to leaving a bad review. The conversion rate from a star rating to a review breaks down as follows:

Users who leave a 1 star rating end up converting to a 1 star review at 12.2%, while 5 star ratings only convert to a review at 0.05%. You should be receiving far more positive ratings than negative ones, but if you’ve had problems with negative ratings, expect many of them to convert into negative written reviews.
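For readers who want to compute the same breakdown for their own app, here is a small Python sketch of the rating-to-review conversion per star value. The function name and the counts are made up for illustration; they are not our data.

```python
def review_rate_by_stars(rating_counts, review_counts):
    """Return {stars: reviews / ratings} for each star value that has ratings."""
    return {
        stars: review_counts.get(stars, 0) / n
        for stars, n in sorted(rating_counts.items())
        if n > 0
    }


# Made-up counts for illustration only.
ratings = {1: 50, 5: 2000}
reviews = {1: 6, 5: 1}
rates = review_rate_by_stars(ratings, reviews)

for stars, rate in rates.items():
    print(f"{stars} star: {rate:.2%} of ratings became reviews")
```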

Bad reviews are bad, good reviews are also bad

We determined the strength of a review by its total character count. We found that users who left negative (1–3 star) reviews wrote short essays, while positive (4–5 star) reviews didn’t have much substance.

Negative app reviews from the iOS Ratings Prompt have 3x the character count of positive reviews.

Median character count of reviews

1 star reviews coming from the prompt were 6x longer than 5 star reviews, with many 5 star reviews being just 1–2 words.

Apple seemingly features app reviews at random, but I am sure character count, keywords, and quantity, among other variables, factor into this determination. That is a nuisance for those chasing a high conversion rate.
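The median character count per star value is simple to compute once you have the review text. Below is a minimal Python sketch; the helper name and the sample reviews are invented for this example.

```python
from statistics import median


def median_length_by_stars(reviews):
    """Return {stars: median character count} for (stars, text) pairs."""
    lengths = {}
    for stars, text in reviews:
        lengths.setdefault(stars, []).append(len(text))
    return {stars: median(vals) for stars, vals in sorted(lengths.items())}


# Made-up reviews for illustration only.
sample_reviews = [
    (5, "Love it"),
    (5, "Great app"),
    (5, "Good"),
    (1, "The app crashed twice and I lost access to my album."),
    (1, "Keeps asking me to upgrade every time I open it."),
]
medians = median_length_by_stars(sample_reviews)
print(medians)
```

The median is used rather than the mean so that a handful of very long reviews does not skew the comparison.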

Summary

The iOS Rating Prompt is a surefire way to acquire high ratings, but don’t count on it if you’re looking for reviews.

The average star rating and average review rating that we were able to get for Photo Vault align with data pulled from the top 100 iOS apps in the US and are an accurate depiction of what you can expect.

Some fun facts that didn’t make the write-up:

- Some of our experiments took months to complete due to the longer interval between prompts

- We use Amplitude for event tracking and Appbot.co for Rating/Review performance tracking

- We added logic to the fake prompt so that it would not show for at least 7 days after a crash, and we saw a small but significant increase in avg. rating

- The iOS Rating Prompt converted to a submitted rating at 13.5%, while our custom rating prompt (prior to the release of the iOS prompt) only had a CTR of 0.8%
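The crash cooldown from the list above is easy to express as an eligibility check. Here is a minimal Python sketch: the 7 day window comes from our experiment, while the function name and the way the last crash time is stored are assumptions for illustration.

```python
from datetime import datetime, timedelta

# The 7 day window is from the post; the rest of this sketch is assumed.
CRASH_COOLDOWN = timedelta(days=7)


def can_show_prompt(now, last_crash_at=None, cooldown=CRASH_COOLDOWN):
    """Return True when no crash was recorded within the cooldown window."""
    if last_crash_at is None:
        # The user has never crashed, so the cooldown does not apply.
        return True
    return now - last_crash_at >= cooldown


now = datetime(2024, 1, 10)
print(can_show_prompt(now))                        # no crash on record
print(can_show_prompt(now, datetime(2024, 1, 5)))  # crashed 5 days ago
print(can_show_prompt(now, datetime(2024, 1, 3)))  # crashed exactly 7 days ago
```

A check like this would sit alongside whatever other eligibility rules decide who sees the prompt and when.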